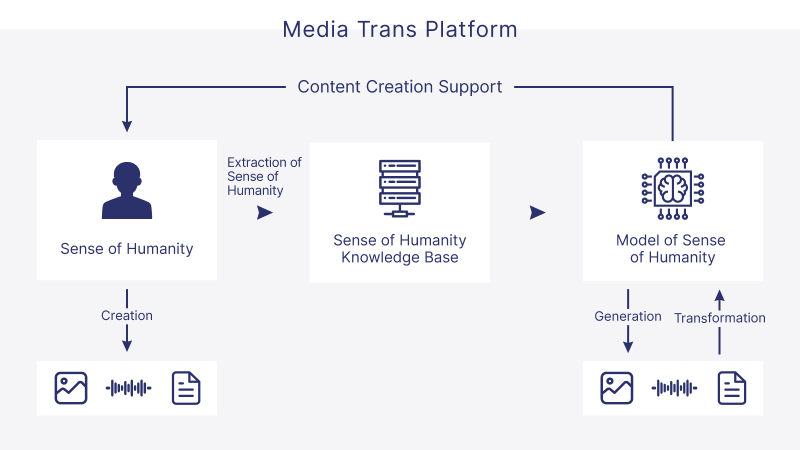

MediaTrans Platform

Human senses have enabled us to create a wide variety of works in media such as sound, images, and text: songs, paintings, photographs, movies, novels, and more. By converting these senses into data and building models of them, we can not only support human creativity but also enable consumers to transform media content scattered across the Internet into other media and create new works of art. The rise of multimodal generative AI has made it possible to generate content automatically across many media. We aim to go beyond generation and realize a MediaTrans Platform that can freely transform both human creative activities and media.

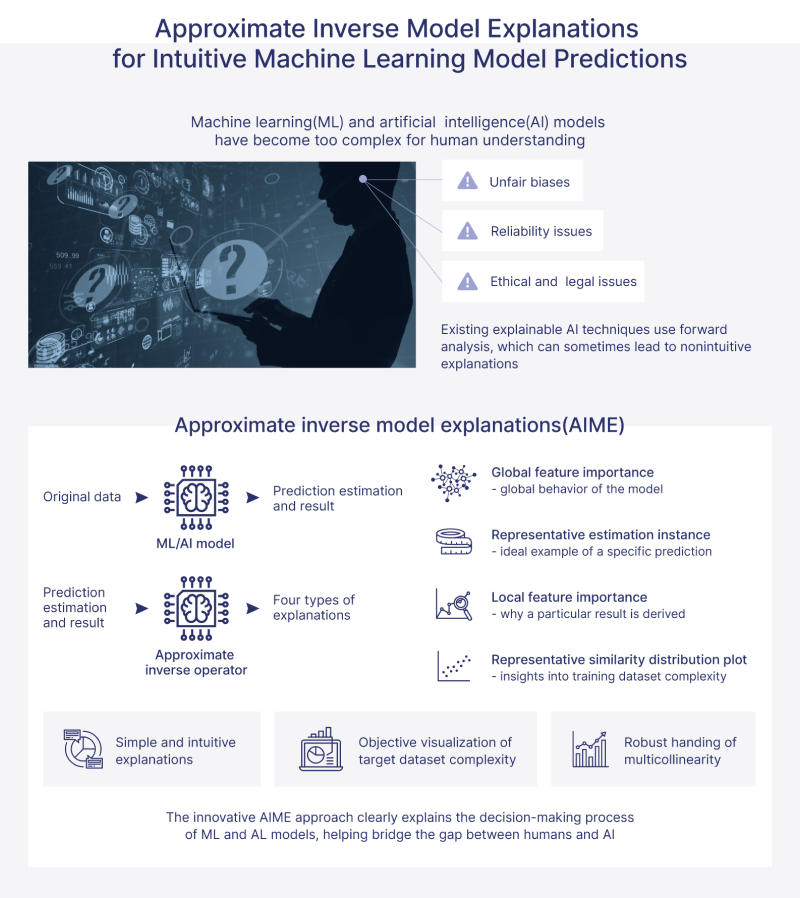

Explainable AI: AIME

Many of today's complex AI and machine learning models are black boxes: even when they produce an estimate for a problem, it is difficult to explain why that estimate was derived. This is why the importance of Explainable AI (XAI) has been widely discussed. AI will also increasingly be required to be fair, explainable, and transparent, and we believe XAI is one technological answer to these requirements. We propose our original method, Approximate Inverse Model Explanations (AIME), which derives approximate inverse operators of black-box models to provide simple, interpretable explanations at a lower computational cost than other methods. AIME can compute both global feature importance, which represents the behavior of the model as a whole, and local feature importance, which explains the basis of the estimate for each data instance, so the model's behavior is easy to visualize. It can also visualize whether a classification problem is difficult or easy.

In our seminar, we study not only the AIME algorithm itself but also new applications of it.
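
To make the idea of an "approximate inverse operator" more concrete, here is a minimal sketch. It assumes, as our own simplification rather than the exact AIME formulation, that the operator A_dag is the least-squares solution of X ≈ A_dag Y, obtained with the Moore-Penrose pseudoinverse, where X holds input instances as columns and Y holds the black-box outputs for those instances. The scoring functions `global_importance` and `local_importance` and the toy black box are hypothetical illustrations, not the published method.

```python
import numpy as np


def approximate_inverse_operator(X, Y):
    """Least-squares estimate of A_dag such that X ≈ A_dag @ Y (assumed formulation).

    X: (n_features, n_samples) inputs, one column per instance.
    Y: (n_outputs, n_samples) black-box outputs for the same instances.
    Returns A_dag with shape (n_features, n_outputs).
    """
    # The Moore-Penrose pseudoinverse gives the minimum-norm least-squares solution.
    return X @ np.linalg.pinv(Y)


def global_importance(A_dag):
    """Hypothetical global score: each output's column scaled to unit L1 norm."""
    return A_dag / np.abs(A_dag).sum(axis=0, keepdims=True)


def local_importance(A_dag, x, y):
    """Hypothetical local score: feature-wise product of instance x with the
    input that A_dag maps the output y back to."""
    return x * (A_dag @ y)


# Toy black box (hypothetical): class 1 when x0 + 0.1 * x2 > 0, else class 0.
rng = np.random.default_rng(0)
X = rng.standard_normal((3, 200))
labels = (X[0] + 0.1 * X[2] > 0).astype(int)
Y = np.eye(2)[labels].T  # one-hot outputs, shape (2, 200)

A_dag = approximate_inverse_operator(X, Y)
print(np.round(global_importance(A_dag), 2))
print(np.round(local_importance(A_dag, X[:, 0], Y[:, 0]), 2))
```

With one-hot outputs and both classes present, the pseudoinverse reduces to Y^T (Y Y^T)^-1, so each column of A_dag is simply the mean input of that class. Feature 0, which drives the toy decision, therefore dominates both columns. Only a single pseudoinverse is computed, which is consistent with the low-cost claim above, although real AIME details may differ.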

Time-series Text Topic Extraction

Time-stamped content is scattered all over the Internet. By extracting topics from this content and visualizing them in chronological order, we can track how each topic trends over time. This reveals important aspects of an event, such as a turning point in public opinion or a change in government policy.
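
The pipeline of extracting topics and re-ordering them by time can be sketched as follows. This is a deliberately simple stand-in. It treats the most frequent non-stopword terms in each month as that month's "topics" instead of using a real topic model such as LDA. The function names, the stopword list, the monthly bucketing, and the sample documents are all hypothetical.

```python
import re
from collections import Counter, defaultdict
from datetime import date

# Tiny illustrative stopword list (hypothetical; real systems use much larger ones).
STOPWORDS = {"the", "a", "an", "of", "on", "in", "to", "and", "is", "for"}


def tokenize(text):
    """Lowercase, keep alphabetic words, drop stopwords."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]


def topics_by_period(docs, top_k=2):
    """docs: iterable of (date, text) pairs.

    Returns {"YYYY-MM": [(term, count), ...]} in chronological order,
    using term frequency per month as a stand-in for topic extraction.
    """
    buckets = defaultdict(Counter)
    for d, text in docs:
        buckets[f"{d.year:04d}-{d.month:02d}"].update(tokenize(text))
    # Sorting the "YYYY-MM" keys gives chronological order.
    return {period: buckets[period].most_common(top_k) for period in sorted(buckets)}


docs = [
    (date(2024, 2, 3), "Energy prices rise"),
    (date(2024, 1, 5), "Tax policy debate in parliament"),
    (date(2024, 2, 18), "Energy subsidy and energy prices"),
    (date(2024, 1, 20), "New tax policy announced"),
]
for period, terms in topics_by_period(docs).items():
    print(period, terms)
```

Even in this toy input, the dominant term shifts from tax policy in January to energy in February. A shift like this is the kind of turning point that a chronological topic view is meant to expose.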

Sign Language Recognition/Composition

Our goal is to create a global communication platform that helps people around the world bridge the communication divide. For example, we believe that a conversation between a signer and a spoken-language speaker requires real-time recognition and synthesis that also conveys the emotions of both parties. We therefore focus on sign language recognition and synthesis that can serve as a medium for communicating emotions in real time. It covers not only hand gestures but also facial expressions, which are known as non-manual (NM) expressions.

Other Collaborative Research

TransMedia Tech Lab pursues joint research with many companies.